habana_frameworks.mediapipe.fn.Contrast


- Class:

habana_frameworks.mediapipe.fn.Contrast(**kwargs)

- Define graph call:

__call__(input, contrast_tensor)

- Parameters:

input - Input tensor to operator. Supported dimensions: minimum = 1, maximum = 4. Supported data types: UINT8, UINT16.

(optional) contrast_tensor - Tensor containing one contrast value for each image in the batch. Supported dimensions: minimum = 1, maximum = 1. Supported data type: FLOAT32.

- Description:

This operator changes the contrast of an image by scaling each pixel value by a contrast scale.

If contrast_tensor is not provided, the scalar contrast_scale keyword argument is applied to all images in the batch. The output pixel value is computed as:

output = input * contrast_scale + (1 - contrast_scale) * 128.
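The equation above can be checked on the host with a small NumPy sketch. This is not the HPU implementation: the function name `contrast_reference` is hypothetical, and the rounding and saturation behavior (round to nearest, then clip to the range of the input data type) are assumptions that this page does not specify.

```python
import numpy as np


def contrast_reference(image, contrast_scale, midpoint=128.0):
    """Host-side reference for: output = input * scale + (1 - scale) * 128."""
    info = np.iinfo(image.dtype)
    # Compute in float32 so intermediate values cannot wrap around.
    scaled = image.astype(np.float32) * contrast_scale + (1.0 - contrast_scale) * midpoint
    # Assumption: results are rounded and saturated to the input data type.
    return np.clip(np.rint(scaled), info.min, info.max).astype(image.dtype)


pixels = np.array([0, 64, 128, 254], dtype=np.uint8)
print(contrast_reference(pixels, 0.5))  # [ 64  96 128 191]
print(contrast_reference(pixels, 2.5))  # [  0   0 128 255]
```

A scale below 1 pulls values toward 128 and a scale above 1 pushes them away from it, while a pixel value of exactly 128 is left unchanged.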

- Supported backend:

HPU

Keyword Arguments

| kwargs | Description |
|---|---|
| contrast_scale | Contrast scale factor required for pixel manipulation. |
| dtype | Output data type. |

Note

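The input and output tensors must be of the same data type.

The first input (input) and the output tensor can use any layout, but both must use the same layout.

The second input (contrast_tensor) is a one-dimensional tensor of size N, with one value per image in the batch.

The contrast value can be provided either as a scalar (contrast_scale) or as a 1D tensor (contrast_tensor).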
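The difference between a scalar contrast value and a 1D tensor of size N can be illustrated with a NumPy sketch. It only models the arithmetic on the host. The helper name `contrast_batch`, the batch-first array layout, and the rounding and clipping steps are assumptions made for illustration, not part of the MediaPipe API.

```python
import numpy as np


def contrast_batch(images, contrast, midpoint=128.0):
    """Apply a scalar or per-image contrast to a batch-first integer array."""
    contrast = np.asarray(contrast, dtype=np.float32)
    if contrast.ndim == 1:
        if contrast.shape[0] != images.shape[0]:
            raise ValueError("contrast tensor must hold one value per image")
        # Reshape (N,) to (N, 1, 1, ...) so each value broadcasts over its own image.
        contrast = contrast.reshape((-1,) + (1,) * (images.ndim - 1))
    elif contrast.ndim != 0:
        raise ValueError("contrast must be a scalar or a 1D tensor")
    info = np.iinfo(images.dtype)
    out = images.astype(np.float32) * contrast + (1.0 - contrast) * midpoint
    # Assumption: results are rounded and saturated to the input data type.
    return np.clip(np.rint(out), info.min, info.max).astype(images.dtype)


batch = np.full((2, 1, 2, 3), 100, dtype=np.uint8)  # N=2 tiny images
same_for_all = contrast_batch(batch, 0.5)           # scalar: every image uses 0.5
per_image = contrast_batch(batch, [1.0, 2.0])       # 1D tensor: one value per image
print(same_for_all[0, 0, 0, 0], per_image[0, 0, 0, 0], per_image[1, 0, 0, 0])
```

With the scalar form every image is adjusted identically, while the 1D form lets each image in the batch receive its own contrast value.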

Example: Contrast Operator

The following code snippet shows how to use the Contrast operator:

    from habana_frameworks.mediapipe import fn
    from habana_frameworks.mediapipe.mediapipe import MediaPipe
    from habana_frameworks.mediapipe.media_types import imgtype as it
    from habana_frameworks.mediapipe.media_types import dtype as dt
    import matplotlib.pyplot as plt
    import os

    # Seconds to keep the preview window open. os.getenv returns a string,
    # so convert it to a number before passing it to plt.pause().
    g_display_timeout = float(os.getenv("DISPLAY_TIMEOUT") or 5)


    # Create MediaPipe derived class
    class myMediaPipe(MediaPipe):
        def __init__(self, device, queue_depth, batch_size, num_threads,
                     op_device, dir, img_h, img_w):
            super(myMediaPipe, self).__init__(
                device,
                queue_depth,
                batch_size,
                num_threads,
                self.__class__.__name__)

            # Read JPEG files from the dataset directory
            self.input = fn.ReadImageDatasetFromDir(shuffle=False,
                                                    dir=dir,
                                                    format="jpg",
                                                    device="cpu")

            # Decode and resize; output layout is WHCN
            self.decode = fn.ImageDecoder(device="hpu",
                                          output_format=it.RGB_P,
                                          resize=[img_w, img_h])

            # Apply the same scalar contrast to every image in the batch
            self.contrast = fn.Contrast(contrast_scale=2.5,
                                        dtype=dt.UINT8,
                                        device=op_device)

            # WHCN -> CWHN
            self.transpose = fn.Transpose(permutation=[2, 0, 1, 3],
                                          tensorDim=4,
                                          dtype=dt.UINT8,
                                          device=op_device)

        def definegraph(self):
            images, labels = self.input()
            images = self.decode(images)
            images = self.contrast(images)
            images = self.transpose(images)
            return images, labels


    def display_images(images, batch_size, cols):
        # Round up so a partially filled last row still gets a subplot row
        rows = (batch_size + cols - 1) // cols
        plt.figure(figsize=(10, 10))
        for i in range(batch_size):
            ax = plt.subplot(rows, cols, i + 1)
            plt.imshow(images[i])
            plt.axis("off")
        plt.show(block=False)
        plt.pause(g_display_timeout)
        plt.close()


    def run(device, op_device):
        batch_size = 6
        queue_depth = 2
        num_threads = 1
        img_width = 200
        img_height = 200
        base_dir = os.environ['DATASET_DIR']
        dir = base_dir + "/img_data/"
        columns = 3

        # Create MediaPipe object
        pipe = myMediaPipe(device, queue_depth, batch_size,
                           num_threads, op_device, dir,
                           img_height, img_width)

        # Build MediaPipe
        pipe.build()

        # Initialize MediaPipe iterator
        pipe.iter_init()

        # Run MediaPipe
        images, labels = pipe.run()

        def as_cpu(tensor):
            # Device tensors expose as_cpu(); host tensors are returned unchanged
            if callable(getattr(tensor, "as_cpu", None)):
                tensor = tensor.as_cpu()
            return tensor

        # Copy data to host from device as numpy array
        images = as_cpu(images).as_nparray()
        labels = as_cpu(labels).as_nparray()

        del pipe

        # Display images
        display_images(images, batch_size, columns)


    if __name__ == "__main__":
        dev_opdev = {'mixed': ['hpu'],
                     'legacy': ['hpu']}

        for dev in dev_opdev.keys():
            for op_dev in dev_opdev[dev]:
                run(dev, op_dev)

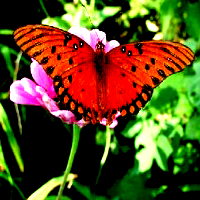

Images with Changed Contrast [1]

[1] Licensed under a CC BY SA 4.0 license. The images used here are taken from https://data.caltech.edu/records/mzrjq-6wc02.