habana_frameworks.mediapipe.fn.Pad

- Class:

habana_frameworks.mediapipe.fn.Pad(**kwargs)

- Define graph call:

__call__(input)

- Parameter:

input - Input tensor to the operator. Supported dimensions: minimum = 1, maximum = 5. Supported data types: INT8, UINT8, BFLOAT16, FLOAT32.

Description:

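Pads a tensor according to the selected padding mode. Constant mode fills the padded region with a constant value (for example, zeros). The reflect, symmetric and edge modes take the padding values from the input itself. The output tensor size is calculated as follows: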

For 4D tensors:

out_sizes[0] = input_sizes[0] + pads[0] + pads[4]

out_sizes[1] = input_sizes[1] + pads[1] + pads[5]

out_sizes[2] = input_sizes[2] + pads[2] + pads[6]

out_sizes[3] = input_sizes[3] + pads[3] + pads[7]

For 5D tensors:

out_sizes[0] = input_sizes[0] + pads[0] + pads[5]

out_sizes[1] = input_sizes[1] + pads[1] + pads[6]

out_sizes[2] = input_sizes[2] + pads[2] + pads[7]

out_sizes[3] = input_sizes[3] + pads[3] + pads[8]

out_sizes[4] = input_sizes[4] + pads[4] + pads[9]
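Both formulas follow one pattern: for an N-D tensor, dimension i grows by pads[i] before and pads[i + N] after. The helper below is a plain-Python sketch of that pattern. The name `pad_output_sizes` is hypothetical and not part of MediaPipe. Applying it to tensors with fewer than 4 dimensions is an extrapolation that the formulas above do not state.

```python
from typing import List, Sequence


def pad_output_sizes(input_sizes: Sequence[int], pads: Sequence[int]) -> List[int]:
    """Return out_sizes[i] = input_sizes[i] + pads[i] + pads[i + N].

    Assumes pads holds N "before" values followed by N "after" values,
    as in the 4D and 5D formulas above (hypothetical helper, not MediaPipe API).
    """
    n = len(input_sizes)
    if not 1 <= n <= 5:
        raise ValueError("Pad supports 1 to 5 dimensions")
    if len(pads) != 2 * n:
        raise ValueError(f"expected {2 * n} pad values for {n}-D input, got {len(pads)}")
    return [input_sizes[i] + pads[i] + pads[i + n] for i in range(n)]


# Values from the example below: 200x200 RGB images, batch of 6, sizes listed as W, H, C, N
print(pad_output_sizes([200, 200, 3, 6], [60, 30, 0, 0, 60, 30, 0, 0]))
# [320, 260, 3, 6]
```

With the example's settings, the padded images are 320 wide and 260 high. Channel and batch sizes are unchanged because their pads are 0.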

- Supported backend:

HPU

Keyword Arguments

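| kwargs | Description |
|---|---|
| mode | Specifies the padding mode: constant, reflect, symmetric or edge (see the Note below). |
| value | Constant value used to fill the padded region in constant mode. |
| pads[10] | Number of elements to add before and after the input in each dimension, given as pad_before[0] … pad_before[4], pad_after[0] … pad_after[4]. For a 4D tensor, the first 4 entries are the before-pads and the next 4 are the after-pads, matching the 4D formula above. |
| dtype | Output data type. |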
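The sketch below emulates constant-mode padding on the host with NumPy's `np.pad`, which makes the `pads` layout concrete. It makes two assumptions:

- `pads` holds N before-values followed by N after-values.
- Dimension 0 (W in WHCN) is the innermost dimension, so it maps to the last NumPy axis.

The reversed order is inferred from the example below, which displays a CWHN tensor with `plt.imshow` as (H, W, C). The helper names are hypothetical. Negative (cropping) pads are deliberately not handled.

```python
import numpy as np


def pads_to_numpy_pad_width(pads, ndim):
    """Convert [before_0..before_{N-1}, after_0..after_{N-1}] to np.pad's pad_width.

    Assumption: dim 0 is the innermost (fastest-varying) dimension, e.g. W in WHCN,
    while NumPy lists axes outermost first, so the dimension order is reversed.
    """
    if len(pads) != 2 * ndim:
        raise ValueError(f"expected {2 * ndim} pad values, got {len(pads)}")
    before, after = pads[:ndim], pads[ndim:]
    return [(before[d], after[d]) for d in reversed(range(ndim))]


def constant_pad(arr, pads, value=0):
    """Host-side emulation of constant-mode padding (non-negative pads only)."""
    if any(p < 0 for p in pads):
        raise ValueError("this sketch does not handle negative (cropping) pads")
    pad_width = pads_to_numpy_pad_width(pads, arr.ndim)
    return np.pad(arr, pad_width, mode="constant", constant_values=value)


# NumPy shape (H=2, W=3), i.e. MediaPipe-style sizes [W=3, H=2]
x = np.arange(6, dtype=np.uint8).reshape(2, 3)
# Pad W by 1 before and 2 after, leave H unpadded, fill with 9
y = constant_pad(x, [1, 0, 2, 0], value=9)
print(y.shape)  # (2, 6)
```

The new W size of 6 agrees with the output size formula: 3 + 1 + 2.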

Note

The input and output tensor data types must match the operation data type (dtype).

In PAD_MODE_SYMMETRIC, the pad along a dimension must not be greater than the size of that dimension.

In PAD_MODE_REFLECT, the pad along a dimension must be less than the size of that dimension.

Negative pads are only supported in constant mode.

PAD_MODE_EDGE is ONNX-compliant.
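The constraints in the Note can be checked before building a pipeline. The sketch below does this with hypothetical string mode names; the operator itself takes an integer `mode`. It then shows the three non-constant modes on a 1-D array, using NumPy modes of the same names. The names suggest that NumPy's modes match the operator's semantics, but this page does not confirm it.

```python
import numpy as np

# Hypothetical string names; the operator's own `mode` kwarg is an integer
VALID_MODES = ("constant", "reflect", "symmetric", "edge")


def check_pads(input_sizes, pads, mode):
    """Raise ValueError if pads violate the constraints listed in the Note.

    Assumes pads = N "before" values followed by N "after" values.
    """
    if mode not in VALID_MODES:
        raise ValueError(f"unknown mode {mode!r}")
    n = len(input_sizes)
    if len(pads) != 2 * n:
        raise ValueError(f"expected {2 * n} pad values, got {len(pads)}")
    for dim, size in enumerate(input_sizes):
        for p in (pads[dim], pads[dim + n]):
            if p < 0 and mode != "constant":
                raise ValueError(f"dim {dim}: negative pads need constant mode")
            if mode == "symmetric" and p > size:
                raise ValueError(f"dim {dim}: symmetric pad {p} > size {size}")
            if mode == "reflect" and p >= size:
                raise ValueError(f"dim {dim}: reflect pad {p} >= size {size}")


# 1-D illustration with NumPy's modes of the same names (assumed equivalent)
x = np.array([1, 2, 3])
for mode in ("reflect", "symmetric", "edge"):
    check_pads([3], [2, 2], mode)  # pads of 2 on a size-3 dim pass every check
    print(mode, np.pad(x, (2, 2), mode=mode).tolist())
# reflect [3, 2, 1, 2, 3, 2, 1]
# symmetric [2, 1, 1, 2, 3, 3, 2]
# edge [1, 1, 1, 2, 3, 3, 3]
```

The outputs show the difference behind the two size limits. Reflect mirrors around the border element without repeating it, so a pad equal to the dimension size would run out of elements. Symmetric repeats the border element, so it can go one element further.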

Example: Pad Operator

The following code snippet shows usage of the Pad operator:

```python
import os

import matplotlib.pyplot as plt

from habana_frameworks.mediapipe import fn
from habana_frameworks.mediapipe.mediapipe import MediaPipe
from habana_frameworks.mediapipe.media_types import imgtype as it
from habana_frameworks.mediapipe.media_types import dtype as dt

# Seconds to keep the figure open; environment variables are strings,
# so convert before passing the value to plt.pause()
g_display_timeout = float(os.getenv("DISPLAY_TIMEOUT", "5"))


# Create MediaPipe derived class
class myMediaPipe(MediaPipe):
    def __init__(self, device, queue_depth, batch_size, num_threads,
                 op_device, img_dir, img_h, img_w):
        super().__init__(device,
                         queue_depth,
                         batch_size,
                         num_threads,
                         self.__class__.__name__)

        self.input = fn.ReadImageDatasetFromDir(shuffle=False,
                                                dir=img_dir,
                                                format="jpg",
                                                device="cpu")

        # WHCN
        self.decode = fn.ImageDecoder(device="hpu",
                                      output_format=it.RGB_P,
                                      resize=[img_w, img_h])

        # Pad W by 60 and H by 30 on each side with the constant 0,
        # so 200x200 images become 320 (W) x 260 (H)
        self.pad = fn.Pad(pads=[60, 30, 0, 0, 60, 30, 0, 0],
                          mode=0,
                          value=0.0,
                          dtype=dt.UINT8,
                          device=op_device)

        # WHCN -> CWHN
        self.transpose = fn.Transpose(permutation=[2, 0, 1, 3],
                                      tensorDim=4,
                                      dtype=dt.UINT8,
                                      device=op_device)

    def definegraph(self):
        images, labels = self.input()
        images = self.decode(images)
        images = self.pad(images)
        images = self.transpose(images)
        return images, labels


def display_images(images, batch_size, cols):
    # Ceiling division so that every image gets a subplot
    rows = (batch_size + cols - 1) // cols
    plt.figure(figsize=(10, 10))
    for i in range(batch_size):
        plt.subplot(rows, cols, i + 1)
        plt.imshow(images[i])
        plt.axis("off")
    plt.show(block=False)
    plt.pause(g_display_timeout)
    plt.close()


def run(device, op_device):
    batch_size = 6
    queue_depth = 2
    num_threads = 1
    img_width = 200
    img_height = 200
    base_dir = os.environ['DATASET_DIR']
    img_dir = base_dir + "/img_data/"
    columns = 3

    # Create MediaPipe object
    pipe = myMediaPipe(device, queue_depth, batch_size,
                       num_threads, op_device, img_dir,
                       img_height, img_width)

    # Build MediaPipe
    pipe.build()

    # Initialize MediaPipe iterator
    pipe.iter_init()

    # Run MediaPipe
    images, labels = pipe.run()

    def as_cpu(tensor):
        if callable(getattr(tensor, "as_cpu", None)):
            tensor = tensor.as_cpu()
        return tensor

    # Copy data to host from device as numpy array
    images = as_cpu(images).as_nparray()
    del pipe

    # Display images
    display_images(images, batch_size, columns)


if __name__ == "__main__":
    dev_opdev = {'mixed': ['hpu'],
                 'legacy': ['hpu']}
    for dev in dev_opdev.keys():
        for op_dev in dev_opdev[dev]:
            run(dev, op_dev)
```

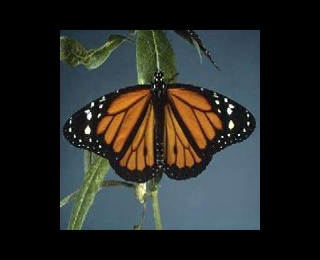

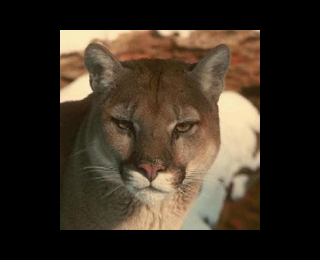

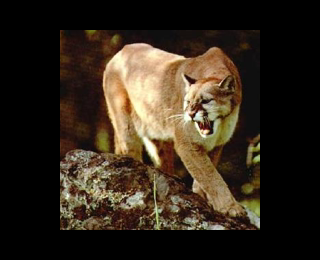

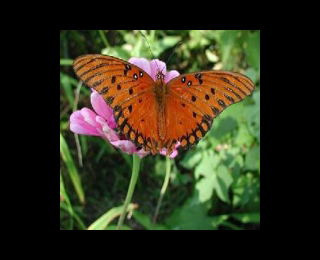

Images with Padding by Constant Value [1]

[1] Licensed under a CC BY SA 4.0 license. The images used here are taken from https://data.caltech.edu/records/mzrjq-6wc02.