habana_frameworks.mediapipe.fn.Flip


- Class:

habana_frameworks.mediapipe.fn.Flip(**kwargs)

- Define graph call:

__call__(input)

- Parameter:

input - Input tensor to operator. Supported dimensions: minimum = 4, maximum = 4. Supported data types: UINT8, UINT16.

Description:

This operator flips selected dimensions (horizontal, vertical, depthwise).

- Supported backend:

HPU

Keyword Arguments

| kwargs | Description |
|---|---|
| horizontal | Flip horizontal dimension. 0 = Do not flip horizontal axis. 1 = Flip horizontal axis. |
| vertical | Flip vertical dimension. 0 = Do not flip vertical axis. 1 = Flip vertical axis. |
| depthwise | Flip depthwise dimension. 0 = Do not flip depth axis. 1 = Flip depth axis. |
| dtype | Output data type. |

Note

Input and output tensors should be in WHCN layout, with W being the fastest-changing dimension.

At least one flip parameter should be 1.
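To make the flag semantics concrete, the following NumPy sketch models the operator on the host. It is not the HPU implementation. It assumes that a WHCN tensor appears on the host as a C-contiguous array of shape (N, C, H, W), and that `horizontal`, `vertical`, and `depthwise` map to the W, H, and C axes respectively. `reference_flip` is a hypothetical helper name, and raising an error when all flags are 0 is only this sketch's way of mirroring the note above; the operator's actual behavior in that case is not stated here.

```python
import numpy as np


def reference_flip(x, horizontal=0, vertical=0, depthwise=0):
    """Host-side model of a flip on a WHCN tensor viewed as an (N, C, H, W) array."""
    if x.ndim != 4:
        raise ValueError("expected a 4-D tensor")
    if not (horizontal or vertical or depthwise):
        raise ValueError("at least one flip parameter should be 1")

    axes = []
    if horizontal:
        axes.append(3)  # W: fastest-changing dimension, last NumPy axis
    if vertical:
        axes.append(2)  # H
    if depthwise:
        axes.append(1)  # C (assumed meaning of "depth" for a 4-D WHCN tensor)
    return np.flip(x, axis=tuple(axes))


# Tiny batch: N=1, C=1, H=2, W=3
x = np.arange(6, dtype=np.uint8).reshape(1, 1, 2, 3)
flipped = reference_flip(x, horizontal=1)
print(flipped[0, 0])  # each row is reversed, row order is unchanged
```

A model like this can serve as a reference when checking device output, as long as the axis mapping assumed above matches the layout of the arrays being compared.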

Example: Flip Operator

The following code snippet shows how to use the Flip operator:

    from habana_frameworks.mediapipe import fn
    from habana_frameworks.mediapipe.mediapipe import MediaPipe
    from habana_frameworks.mediapipe.media_types import imgtype as it
    from habana_frameworks.mediapipe.media_types import dtype as dt
    import matplotlib.pyplot as plt
    import numpy as np
    import os

    # Seconds to keep the preview window open; DISPLAY_TIMEOUT arrives as a string
    g_display_timeout = float(os.getenv("DISPLAY_TIMEOUT") or 5)


    # Create MediaPipe derived class
    class myMediaPipe(MediaPipe):
        def __init__(self, device, queue_depth, batch_size, num_threads, op_device, dir, img_h, img_w):
            super(
                myMediaPipe,
                self).__init__(
                device,
                queue_depth,
                batch_size,
                num_threads,
                self.__class__.__name__)

            self.input = fn.ReadImageDatasetFromDir(shuffle=False,
                                                    dir=dir,
                                                    format="jpg",
                                                    device="cpu")

            # WHCN
            self.decode = fn.ImageDecoder(device="hpu",
                                          output_format=it.RGB_P,
                                          resize=[img_w, img_h])

            self.flip = fn.Flip(horizontal=1,
                                vertical=0,
                                depthwise=0,
                                dtype=dt.UINT8,
                                device=op_device)

            # WHCN -> CWHN
            self.transpose = fn.Transpose(permutation=[2, 0, 1, 3],
                                          tensorDim=4,
                                          dtype=dt.UINT8,
                                          device=op_device)

        def definegraph(self):
            inp, labels = self.input()
            inp = self.decode(inp)
            images = self.flip(inp)
            # Transpose both the original and the flipped batch for display and comparison
            inp = self.transpose(inp)
            images = self.transpose(images)
            return images, inp, labels


    def display_images(images, batch_size, cols):
        # Ceiling division so a partially filled last row still gets a slot
        rows = (batch_size + cols - 1) // cols
        plt.figure(figsize=(10, 10))
        for i in range(batch_size):
            ax = plt.subplot(rows, cols, i + 1)
            plt.imshow(images[i])
            plt.axis("off")
        plt.show(block=False)
        plt.pause(g_display_timeout)
        plt.close()


    def run(device, op_device):
        batch_size = 6
        queue_depth = 2
        num_threads = 1
        img_width = 200
        img_height = 200
        base_dir = os.environ['DATASET_DIR']
        dir = base_dir + "/img_data/"
        columns = 3

        # Create MediaPipe object
        pipe = myMediaPipe(device, queue_depth, batch_size,
                           num_threads, op_device, dir,
                           img_height, img_width)

        # Build MediaPipe
        pipe.build()

        # Initialize MediaPipe iterator
        pipe.iter_init()

        # Run MediaPipe
        images, inp, labels = pipe.run()

        def as_cpu(tensor):
            if (callable(getattr(tensor, "as_cpu", None))):
                tensor = tensor.as_cpu()
            return tensor

        # Copy data to host from device as numpy array
        images = as_cpu(images).as_nparray()
        inp = as_cpu(inp).as_nparray()
        labels = as_cpu(labels).as_nparray()

        del pipe

        # Display images
        display_images(images, batch_size, columns)

        return inp, images


    def compare_ref(inp, out):
        # The transposed host arrays are indexed as (N, H, W, C), so axis 2 is the width
        ref = np.flip(inp, axis=2)
        if not np.array_equal(ref, out):
            raise ValueError("Mismatch w.r.t ref")


    if __name__ == "__main__":
        dev_opdev = {'mixed': ['hpu'],
                     'legacy': ['hpu']}

        for dev in dev_opdev.keys():
            for op_dev in dev_opdev[dev]:
                inp, out = run(dev, op_dev)
                compare_ref(inp, out)

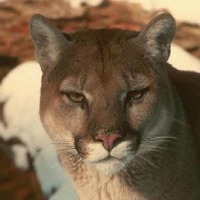

Horizontally Flipped Images 1

1. Licensed under a CC BY SA 4.0 license. The images used here are taken from https://data.caltech.edu/records/mzrjq-6wc02.
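The reference check in `compare_ref` flips axis 2 of the transposed arrays. A small host-only sketch can show why that lines up with a horizontal flip applied before the transpose. It assumes the host view of a WHCN tensor is an (N, C, H, W) array and that the `[2, 0, 1, 3]` permutation (WHCN -> CWHN) therefore corresponds to moving the channel axis last, giving (N, H, W, C). The helper name `to_nhwc` and the random test data are illustrative only.

```python
import numpy as np

# Stand-in for a decoded WHCN batch as seen on the host: (N, C, H, W)
rng = np.random.default_rng(0)
nchw = rng.integers(0, 256, size=(2, 3, 4, 5), dtype=np.uint8)


def to_nhwc(a):
    # Host-side equivalent of the WHCN -> CWHN transpose (assumed axis mapping)
    return np.transpose(a, (0, 2, 3, 1))


# Path taken by the pipeline: flip along W first, then transpose
flip_then_transpose = to_nhwc(np.flip(nchw, axis=3))

# Path taken by the reference check: transpose first, then flip axis 2 (W)
transpose_then_flip = np.flip(to_nhwc(nchw), axis=2)

same = np.array_equal(flip_then_transpose, transpose_then_flip)
print(flip_then_transpose.shape, same)
```

Under these assumptions, a vertical flip would be checked with `axis=1` and a depthwise flip with `axis=3` on the transposed arrays.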