Bandwidth Test Plugins Design, Switches and Parameters


This section describes plugin specific switches only. Common plugin switches and parameters are described in hl_qual Common Plugin Switches and Parameters.

Note

Before running the plugin tests described in this document, make sure to set the export __python_cmd=python3 environment variable.

It is recommended to run PCI parallel mode testing while using PCI Bandwidth tests and only on bare metal machines. Running the test on a VM fails due to faulty PCI tree reflection.

Gaudi 3 Memory Bandwidth Plugin Design Consideration and Responsibilities¶

The low level host-to-memory bandwidth test plugin is an hl_thunk based DMA bandwidth measurement test. The test evaluates PCI bandwidth and is built on top of the hlthunk API - a lower level API wrapping the Intel Gaudi driver.

Memory Bandwidth Sub-Test Modes¶

PCI Host-to-Memory Communication Tests

HOST ==> HBM

HBM ==> HOST

HBM <==> HOST (bidirectional test)

Device Memory to Memory Test

HBM ==> HBM, using single and multiple DMA channels

Serial and Parallel Running Mode Considerations¶

Since the PCI link is common to all devices, running the test on multiple devices in parallel mode may result in reduced bandwidth results. To get the most accurate bandwidth result, run the PCI test in serial mode. If you are using a bare metal setup, you can run the test either in serial or parallel mode.

Memory to memory tests can be run in serial and parallel mode. Because the memory test takes longer to run, running it on multiple devices in parallel can decrease the overall execution time. For this reason, split the test into two runs, one per mode:

Running memory test in parallel mode example:

./hl_qual -gaudi3 -c all -rmod parallel -mb -memOnly

Running PCI test in serial mode example:

./hl_qual -gaudi3 -c all -rmod serial -mb -b -pciOnly

Memory Bandwidth Plugin Test - Pass/Fail Criteria¶

The PCI test pass/fail criteria are similar to the PCI load test pass/fail criteria. The following lists some assumptions made in the test plugin code:

The full PCI path between HOST CPU and device is a predefined Gen-5 setup.

The full PCI path between HOST CPU and device is composed of 16 lanes.

The below pass criteria numbers are precalculated for a setup with 16 lanes and Gen-5 compatibility:

Unidirectional download from host to device with an expected bandwidth of 39.4034 GB/s, assuming CPU with Gen-5 PCI link.

Unidirectional upload from device to host with an expected bandwidth of 38.8405 GB/s, assuming CPU with Gen-5 PCI link.

Bidirectional test which calculates the bandwidth on a simultaneous upload and download with an expected bandwidth of 80.3605 GB/s.

The calculated pass/fail criteria threshold is theoretical, hence the PCI test has a 10% allowable degradation. Below that, the plugin fails the test run.
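The 10% allowance can be turned into concrete minimum passing values. The following is a minimal sketch, not the plugin's code: it assumes the allowance is applied as expected bandwidth multiplied by 0.9 and that a result exactly on the limit passes. The function and constant names are hypothetical.

```python
# Expected Gen-5, 16-lane bandwidths quoted above, in GB/s.
EXPECTED_GBPS = {
    "download (host to device)": 39.4034,
    "upload (device to host)": 38.8405,
    "bidirectional": 80.3605,
}
ALLOWED_DEGRADATION = 0.10  # the 10% allowable degradation


def min_passing_bandwidth(expected_gbps, degradation=ALLOWED_DEGRADATION):
    """Lowest bandwidth that still passes (assumed: expected * (1 - degradation))."""
    return expected_gbps * (1.0 - degradation)


def passes(measured_gbps, expected_gbps, degradation=ALLOWED_DEGRADATION):
    """True if the measured bandwidth is within the allowed degradation."""
    return measured_gbps >= min_passing_bandwidth(expected_gbps, degradation)


if __name__ == "__main__":
    for name, expected in EXPECTED_GBPS.items():
        limit = min_passing_bandwidth(expected)
        print(f"{name}: expected {expected:.4f} GB/s, minimum passing {limit:.4f} GB/s")
```

Under these assumptions, a download measurement of 36 GB/s would pass and 35 GB/s would fail.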

Gaudi 3 Memory Bandwidth Test Plugin Switches and Parameters¶

hl_qual -gaudi3 -c <pci bus id> -rmod <serial | parallel> [-dis_mon] [-mon_cfg <monitor INI path>] [-b] [-memOnly | -pciOnly] -mb [-fsp] [-gen <gen modifier>] [-lanes <lane_specifier>]

| Switches and Parameters | Description |
|---|---|
| -mb | Test selector. |
| -b | Activates bidirectional bandwidth testing. |
| -memOnly | Only activates the device memory test. |
| -pciOnly | Only activates the PCI tests. |
| -fsp | Extracts PCIe tree information to the hl_qual report. It can be run only in serial mode and on a bare metal machine. |
| -gen <gen modifier> | Specifies the expected PCI device generation using one of two applicable modifiers. If this switch is omitted, it is assumed that the system under test (HOST + Gaudi device) is a Gen-5 PCI data path. |
| -lanes <lane_specifier> | Sets the applicable number of PCI link lanes. It can be either 8 or 16. |

The below command line executes the test in serial mode, and sets a pass/fail criteria for transfer speed:

./hl_qual -gaudi3 -c all -rmod serial -mb -b -dis_mon

Gaudi 2 Memory Bandwidth Plugin Design Consideration and Responsibilities¶

The memory bandwidth test plugin is an hl_thunk based DMA bandwidth measurement test. The test includes PCI bandwidth and a variation of memory transfer tests such as HBM and SRAM. The tests are built on top of the hlthunk API - a lower level API wrapping the Intel Gaudi driver.

Memory Bandwidth Sub-Test Modes¶

PCI Host-to-Device Communication Tests

HOST ==> HBM

HBM ==> HOST

HBM <==> HOST (bidirectional test)

HOST ==> SRAM

SRAM ==> HOST

SRAM <==> HOST (bidirectional test)

Device Memory to Memory Tests

SRAM ==> HBM, using single and multiple DMA channels

HBM ==> SRAM, using single and multiple DMA channels

HBM ==> HBM, using single and multiple DMA channels

Serial and Parallel Running Mode Considerations¶

Since the PCI link is common to all devices, running PCI HOST/DEVICE test variants on multiple devices in parallel mode may result in reduced bandwidth results. To get the most accurate bandwidth result, run the PCI test in serial mode. If you are using a bare metal setup, you can run the test either in serial or parallel mode.

Memory to memory tests can be run in serial and parallel mode. Because the memory test takes longer to run, running it on multiple devices in parallel can decrease the overall execution time. For this reason, split the test into two runs, one per mode:

Running memory test in parallel mode example:

./hl_qual -gaudi2 -c all -rmod parallel -mb -memOnly

Running PCI test in serial mode example:

./hl_qual -gaudi2 -c all -rmod serial -mb -b -pciOnly -sramOnly

Memory Bandwidth Test - Pass/Fail Criteria¶

The pass/fail criteria depend on the sub-test type executed. Memory to memory test pass/fail criteria are pre-calibrated. The PCI sub-test criteria are similar to the PCI load test pass/fail criteria. The following lists some assumptions made in the test plugin code:

The full PCI path between HOST CPU and device is a predefined Gen-3 setup.

The full PCI path between HOST CPU and device is composed of 16 lanes.

When conducting a test on a different host setup, you can change these assumptions by using the applicable test switches. This causes the test plugin to change the pass/fail criteria accordingly.

The below pass criteria numbers are precalculated for a setup with 16 lanes and Gen-3 compatibility:

Unidirectional download from host to device with an expected bandwidth of 11.9 GB/s, assuming CPU with Gen-3 PCI link.

Unidirectional upload from device to host with an expected bandwidth of 12.9 GB/s, assuming CPU with Gen-3 PCI link.

Bidirectional test which calculates the bandwidth on a simultaneous upload and download with an expected bandwidth of 19.5 GB/s.

The calculated pass/fail criteria threshold is theoretical, hence the PCI test has a 10% allowable degradation. Below that, the plugin fails the test run.

Gaudi 2 Memory Bandwidth Test Plugin Switches and Parameters¶

hl_qual -gaudi2 -c <pci bus id> -rmod <serial | parallel> [-dis_mon] [-mon_cfg <monitor INI path>] [-b] [-memOnly | -pciOnly | -sramOnly | -pciall] -mb [-fsp] [-gen <gen modifier>] [-lanes <lane_specifier>]

| Switches and Parameters | Description |
|---|---|
| -mb | Memory bandwidth test selector. |
| -b | Activates bidirectional bandwidth tests. |
| -memOnly | Only activates the device memory tests. |
| -pciOnly | Only activates PCI tests. |
| -sramOnly | Only activates PCI tests. |
| -pciall | Activates all PCI tests listed in both -pciOnly and -sramOnly. |
| -fsp | Extracts PCIe tree information to the hl_qual report. |
| -gen <gen modifier> | Specifies the expected PCI device generation using one of two applicable modifiers. If this switch is omitted, it is assumed that the system under test (HOST + Gaudi device) is a Gen-4 PCI data path. |
| -lanes <lane_specifier> | Sets the applicable number of PCI link lanes. It can be either 8 or 16. |

The below command line executes all of the memory bandwidth tests listed above in serial mode, and sets a pass/fail criteria for transfer speed:

./hl_qual -gaudi2 -c all -rmod serial -mb -b -dis_mon

PCI Bandwidth Test Design Considerations and Requirements¶

The test runs are partitioned into three sub-tests: upload, download and bidirectional. The PCI bandwidth test plugin measures the PCI bandwidth when moving data from the host to/from the device HBM memory. The hl_qual can run this test using the two running modes - serial and parallel.

Serial Mode¶

In this testing mode, each device should achieve maximal bandwidth.

Running the test in serial mode imposes certain restrictions on the PCI test time duration.

For example, if you run the test for 20 seconds on eight Gaudi 2 cards, the actual test duration is Total_test_duration = 20 * 3 * 8 = 480 seconds (20 seconds for each of the three sub-tests on each of the eight cards).

To average the bandwidth calculation, 10-20 seconds is sufficient (about 200 GB for upload/download). Running the test beyond 1 minute only increases the total test duration.
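The serial-mode duration arithmetic can be sketched as follows. This is only an illustration of the multiplication described above; the function name is hypothetical, and the sub-test count of three assumes the bidirectional test is enabled with -b (two otherwise).

```python
def serial_total_duration(seconds_per_subtest, num_devices, sub_tests=3):
    """Total serial-mode run time: each device runs every sub-test in turn."""
    return seconds_per_subtest * sub_tests * num_devices


if __name__ == "__main__":
    # 20 seconds per sub-test, eight cards, upload + download + bidirectional
    print(serial_total_duration(20, 8))               # 20 * 3 * 8 = 480
    # Same run without the bidirectional sub-test
    print(serial_total_duration(20, 8, sub_tests=2))  # 20 * 2 * 8 = 320
```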

Parallel Mode¶

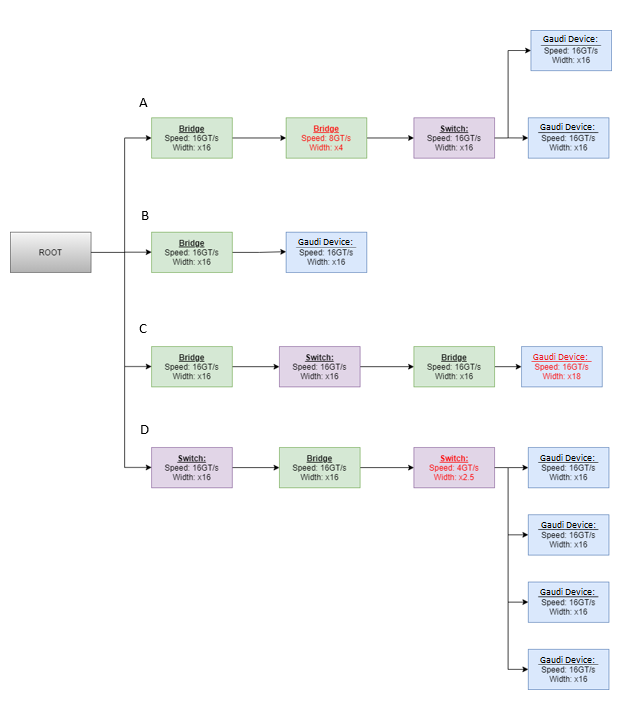

Use Linux PCIe information for this testing mode. In this scenario, the test compares the card’s performance against the switch/bridge bottle-neck in cards connectivity paths.

The example below illustrates how pass/fail criteria is determined in this mode: For each path <P> to Gaudi devices <D1, D2, …> in the PCIe tree:

Extract the bottle-neck <B> switch/bridge/device in P.

If ( BandWidth(B) <= Sum(BandWidth(D1) + BandWidth(D2) + …) ) == True, then PASS else FAIL.

For example:

Figure 18 Example: PCIe tree¶

Path A : Pass if BandWidth(Second Bridge) <= Sum(BandWidth(D1) + BandWidth(D2)).

Path B : Pass if BandWidth(first Bridge) <= Sum(BandWidth(D1)).

Path C : Pass if BandWidth(D1) <= Sum(BandWidth(D1)).

Path D : Pass if BandWidth(Second Switch) <= Sum(BandWidth(D1) + BandWidth(D2) + BandWidth(D3) + BandWidth(D4)).

Note

The keyword BandWidth refers to the measured bandwidth on the switch/device.
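The per-path rule can be expressed in a few lines. The sketch below applies the comparison exactly as written above; the function names and all bandwidth values are hypothetical and are not taken from a real system or from the plugin source.

```python
def path_passes(bottleneck_gbps, device_gbps):
    """PASS when BandWidth(B) <= Sum(BandWidth(D1) + BandWidth(D2) + ...)."""
    return bottleneck_gbps <= sum(device_gbps)


# Hypothetical measured bandwidths in GB/s: (bottleneck, [devices behind it]).
EXAMPLE_PATHS = {
    "Path A": (40.0, [21.0, 20.5]),              # second bridge, two devices
    "Path B": (22.0, [21.0]),                    # first bridge, one device
    "Path D": (60.0, [20.0, 19.0, 21.0, 20.5]),  # second switch, four devices
}


def evaluate(paths):
    """Return a PASS/FAIL verdict for every path in the mapping."""
    return {
        name: "PASS" if path_passes(bottleneck, devices) else "FAIL"
        for name, (bottleneck, devices) in paths.items()
    }


if __name__ == "__main__":
    for name, verdict in evaluate(EXAMPLE_PATHS).items():
        print(name, verdict)
```

With these made-up numbers, Path A and Path D pass, while Path B fails because the single device behind the bridge delivers less than the bandwidth measured on the bridge.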

PCI Bandwidth Test - Pass/Fail Criteria¶

The PCI test plugin runs multiple tests to check the download and upload bandwidth with the following pass/fail criteria:

Unidirectional download from host to device with an expected bandwidth of 39.4034 GB/s assuming CPU with Gen-5 PCI link.

Unidirectional upload from device to host with an expected bandwidth of 38.8405 GB/s assuming CPU with Gen-5 PCI link.

Bidirectional test which calculates the bandwidth on a simultaneous upload and download with an expected bandwidth of 80 GB/s assuming CPU with Gen-5 PCI link.

Unidirectional download from host to device with an expected bandwidth of 20.9 GB/s assuming CPU with Gen-4 PCI link.

Unidirectional upload from device to host with an expected bandwidth of 23.1 GB/s assuming CPU with Gen-4 PCI link.

Bidirectional test which calculates the bandwidth on a simultaneous upload and download with an expected bandwidth of 34.6 GB/s assuming CPU with Gen-4 PCI link.

The calculated pass/fail criteria threshold is theoretical, hence the PCI test has a 10% allowable degradation.

PCI Bandwidth Test Plugin Switches and Parameters¶

hl_qual -gaudi3 -c <pci bus id> [-t <time in seconds>] -rmod <serial | parallel> [-dis_mon] [-mon_cfg <monitor INI path>] -p [-b] [-size <size in bytes>] [-fsp] [-gen <gen modifier>] [-lanes <lane_specifier>]

| Switches and Parameters | Description |
|---|---|
| -p | PCI test plugin selector. |
| -b | Enables the bidirectional PCI test (simultaneous upload and download). If this switch is omitted, the plugin skips the bidirectional test and performs only the upload and download bandwidth tests. |
| -size <size in bytes> | Upload/Download buffer size specification. The minimum buffer size is 1073741824 bytes. If this switch is omitted, the default upload/download buffer size is 1GB. Example: ./hl_qual -gaudi3 -c all -rmod serial -t 20 -p -b -size 2048000000 |
| -t <time in seconds> | PCI test duration in seconds. Applicable range: 10-3600. If this switch is omitted, the default value is 10 seconds. |
| -fsp | Extracts PCIe tree information to the hl_qual report. It can be run only in serial mode and on a bare metal machine. |
| -gen <gen modifier> | Specifies the expected PCI device generation using one of two applicable modifiers. If this switch is omitted, it is assumed that the system under test (HOST + Gaudi device) is a Gen-5 PCI data path. |
| -lanes <lane_specifier> | Sets the applicable number of PCI link lanes. It can be either 8 or 16. |

./hl_qual -gaudi3 -c all -rmod serial -dis_mon -t 120 -p -b

hl_qual -gaudi2 -c <pci bus id> [-t <time in seconds>] -rmod <serial | parallel> [-dis_mon] [-mon_cfg <monitor INI path>] -p [-b] [-size <size in bytes>] [-fsp] [-gen <gen modifier>] [-lanes <lane_specifier>]

| Switches and Parameters | Description |
|---|---|
| -p | PCI test plugin selector. |
| -b | Enables the bidirectional PCI test (simultaneous upload and download). If this switch is omitted, the plugin skips the bidirectional test and performs only the upload and download bandwidth tests. |
| -size <size in bytes> | Upload/Download buffer size specification. The minimum buffer size is 1073741824 bytes. If this switch is omitted, the default upload/download buffer size is 1GB. Example: ./hl_qual -gaudi2 -c all -rmod serial -t 20 -p -b -size 2048000000 |
| -t <time in seconds> | PCI test duration in seconds. Applicable range: 10-3600. If this switch is omitted, the default value is 10 seconds. |
| -fsp | Extracts PCIe tree information to the hl_qual report. It can be run only in serial mode and on a bare metal machine. |
| -gen <gen modifier> | Specifies the expected PCI device generation using one of two applicable modifiers. If this switch is omitted, it is assumed that the system under test (HOST + Gaudi device) is a Gen-4 PCI data path. |
| -lanes <lane_specifier> | Sets the applicable number of PCI link lanes. It can be either 8 or 16. |

./hl_qual -gaudi2 -c all -rmod serial -dis_mon -t 120 -p -b

Note

Since the PCI bandwidth test plugin conducts up to three sub-tests (upload, download and bidirectional), the duration given in the command line should be multiplied by three.

For systems with a malfunctioning PCI link (such as low bandwidth), the test duration is different from what was specified by the -t option, as the PCI plugin calculates the number of test iterations based on the expected bandwidth.
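To see why a slow link stretches the run, consider the following rough model. It is an assumption made for illustration only, not the plugin's actual algorithm: the iteration count is taken to be fixed up front from the requested duration, the expected bandwidth and the buffer size, and GB/s is taken to mean 10^9 bytes per second. All names are hypothetical.

```python
import math

GB = 1e9  # assumption: 1 GB/s = 10**9 bytes per second
DEFAULT_BUFFER_BYTES = 1073741824  # minimum buffer size quoted for -size


def planned_iterations(t_seconds, expected_gbps, buffer_bytes=DEFAULT_BUFFER_BYTES):
    """Assumed model: transfers needed to fill t_seconds at the expected bandwidth."""
    return math.ceil(t_seconds * expected_gbps * GB / buffer_bytes)


def actual_duration(t_seconds, expected_gbps, measured_gbps,
                    buffer_bytes=DEFAULT_BUFFER_BYTES):
    """Time the planned transfers take at the bandwidth the link really delivers."""
    iterations = planned_iterations(t_seconds, expected_gbps, buffer_bytes)
    return iterations * buffer_bytes / (measured_gbps * GB)


if __name__ == "__main__":
    # Healthy link: the run takes roughly the requested 10 seconds.
    print(round(actual_duration(10, 39.4034, 39.4034), 2))
    # Link at half the expected bandwidth: the same transfers take about twice as long.
    print(round(actual_duration(10, 39.4034, 19.7017), 2))
```

Under this model, a link delivering half the expected bandwidth roughly doubles the duration of each sub-test.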