Congestion Test

The following tests are performed using the HCCL demos.

Note

Basic users testing connectivity and basic performance can use any of the collectives, including HCCL allreduce, allgather, reducescatter, and all2all. Testing for optimal switch configurations and incast congestion scenarios is intended for advanced users only; for these tests, use the --ranks_list option with the send_recv test.

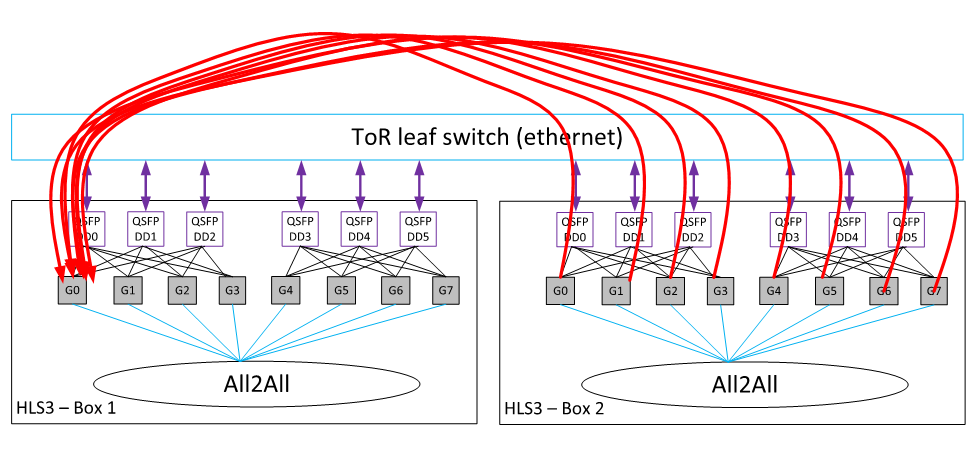

Incast Congestion Inside a Single Leaf Switch¶

Test 1 (8:1 congestion): In this test, we create 8:1 incast congestion in which G0 of HLS-3 Box1 is the root, and all eight Gaudis in the second box simultaneously send data to G0 in the first box.

PASS criteria: Each of the Gaudis in the second box should get about 75/8 ≈ 9.4 GB/s of bandwidth. Switch monitors: We should see Pause frames in the switch and NO packet drops in the leaf switch. Note that this test should be run on every leaf switch, preferably using all boxes connected to the same leaf switch. We should also monitor packet drops on the Gaudi side (psn_out_of_range and psn_out_of_sequence) to ensure that they remain minimal.
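The expected per-sender figure in the PASS criteria is the target's bandwidth divided evenly among the senders. The sketch below shows that arithmetic for the 8:1, 16:1, and 24:1 cases, assuming the 75 GB/s figure used above is the receiving Gaudi's achievable bandwidth and that PFC shares it fairly. The `passes` helper and its 10% tolerance are hypothetical, not a documented threshold.

```python
from typing import Sequence


def fair_share_gbps(target_bw_gbps: float, fan_in: int) -> float:
    """Per-sender bandwidth when `fan_in` senders share one target evenly."""
    if fan_in <= 0:
        raise ValueError("fan_in must be positive")
    return target_bw_gbps / fan_in


def passes(measured_gbps: Sequence[float], target_bw_gbps: float = 75.0,
           tolerance: float = 0.10) -> bool:
    """Hypothetical PASS check: every sender reaches at least
    (1 - tolerance) of its fair share. The 10% default is an assumption."""
    expected = fair_share_gbps(target_bw_gbps, len(measured_gbps))
    return all(bw >= expected * (1 - tolerance) for bw in measured_gbps)


if __name__ == "__main__":
    for fan_in in (8, 16, 24):
        share = fair_share_gbps(75.0, fan_in)
        print(f"{fan_in}:1 incast -> about {share:.1f} GB/s per sender")
```

Pass the per-rank bandwidths reported by each sender to `passes`; the number of values supplied is taken as the fan-in.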

# 1st box:

cd $DEMOS_ROOT/gaudi/hccl_test; HLS_ID=0

HCCL_COMM_ID=10.111.233.253:5555 python3 run_hccl_demo.py -clean --test send_recv --nranks 16 --loop 1000 --node_id 0 --size 16m --ranks_per_node 8 --ranks_list "0,8,8,0,1,8,8,1,2,8,8,2,3,8,8,3,4,8,8,4,5,8,8,5,6,8,8,6,7,8,8,7"

# 2nd box:

cd $DEMOS_ROOT/gaudi/hccl_test; HLS_ID=0

HCCL_COMM_ID=10.111.233.253:5555 python3 run_hccl_demo.py -clean --test send_recv --nranks 16 --loop 1000 --node_id 1 --size 16m --ranks_per_node 8 --ranks_list "0,8,8,0,1,8,8,1,2,8,8,2,3,8,8,3,4,8,8,4,5,8,8,5,6,8,8,6,7,8,8,7"
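Every box runs the same send_recv command and differs only in --node_id, so the per-box command lines can be generated rather than copied by hand. This is a minimal sketch that reuses only the flags and the example HCCL_COMM_ID address shown above; `build_command` is a hypothetical helper, the `cd` step is omitted, and `shlex.quote` is applied so that every argument is shell-safe.

```python
import shlex

# The 8:1 ranks list used by both boxes above.
RANKS_LIST_8_TO_1 = "0,8,8,0,1,8,8,1,2,8,8,2,3,8,8,3,4,8,8,4,5,8,8,5,6,8,8,6,7,8,8,7"


def build_command(node_id: int, nranks: int, ranks_list: str,
                  comm_id: str = "10.111.233.253:5555") -> str:
    """Return the send_recv command line for one box (hypothetical helper)."""
    args = [
        "python3", "run_hccl_demo.py", "-clean",
        "--test", "send_recv",
        "--nranks", str(nranks),
        "--loop", "1000",
        "--node_id", str(node_id),
        "--size", "16m",
        "--ranks_per_node", "8",
        "--ranks_list", ranks_list,
    ]
    return f"HCCL_COMM_ID={comm_id} " + " ".join(shlex.quote(a) for a in args)


if __name__ == "__main__":
    for node in range(2):  # node 0 is the 1st box, node 1 is the 2nd box
        print(f"# box {node + 1}:")
        print(build_command(node, nranks=16, ranks_list=RANKS_LIST_8_TO_1))
```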

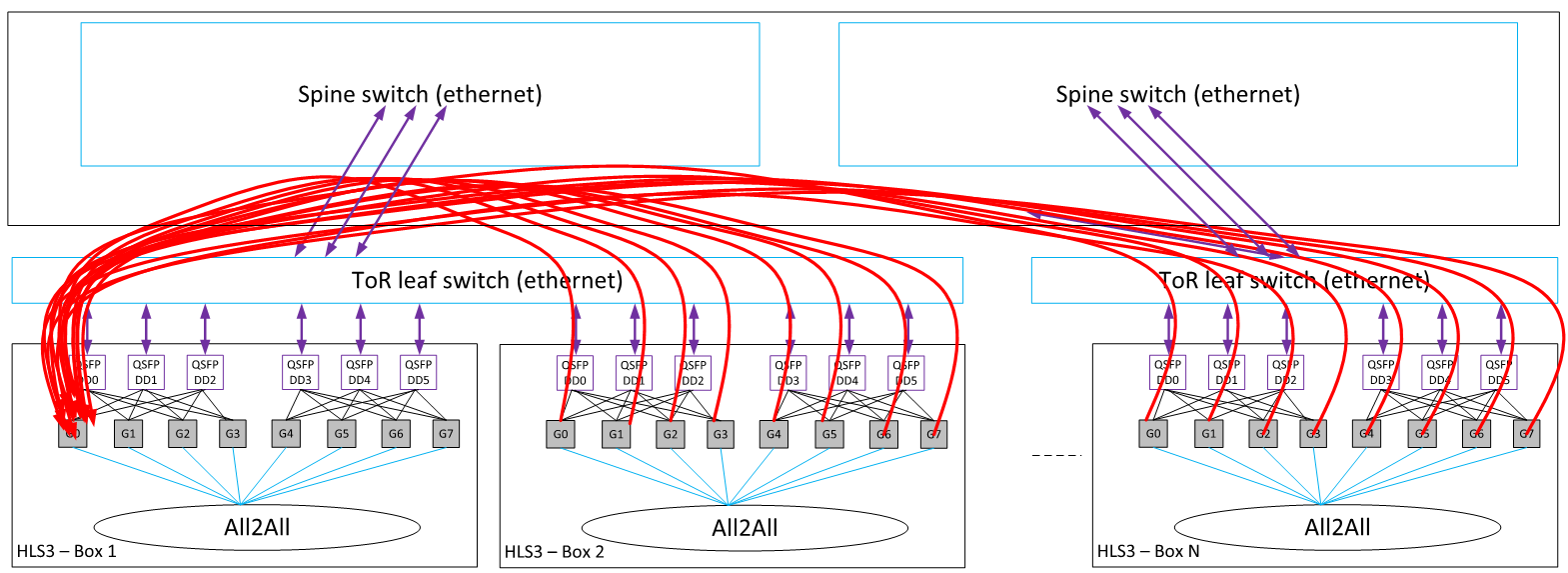

Incast Congestion Across Leaf and Spine Switches¶

16:1 congestion: This test must include more than one leaf switch so that we can test PFC (Priority-based Flow Control) functionality on both the leaf and spine switches. As shown in the figure, two boxes attached to two different leaf switches target a third box (G0 of HLS-3 Box0). In this case, all 16 Gaudis in the two sending boxes (for example, ranks 8-23) simultaneously send data to G0 of Box0.

PASS criteria: Each of the 16 sending Gaudis should get about 75/16 ≈ 4.7 GB/s of bandwidth. Switch monitors: We should see Pause frames in both the leaf and spine switches and should ideally see NO drops in either. In the rare cases where drops occur, they should be minimal. If large numbers of packet drops are observed, adjust the "headroom" settings in the switch until the number of packet drops is close to 0. We should also monitor packet drops on the Gaudi side (psn_out_of_range and psn_out_of_sequence) to ensure that they remain minimal.
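The Gaudi-side check amounts to comparing the psn_out_of_range and psn_out_of_sequence counters before and after a run. This is a minimal sketch, assuming you have already collected those counters for a port into dictionaries (how you read them is outside this sketch). `drop_deltas`, `drops_acceptable`, and the `max_delta` threshold are hypothetical, and the sample values are illustrative, not measurements.

```python
from typing import Dict

COUNTERS = ("psn_out_of_range", "psn_out_of_sequence")


def drop_deltas(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, int]:
    """Increase of each PSN error counter over the test run."""
    return {name: after.get(name, 0) - before.get(name, 0) for name in COUNTERS}


def drops_acceptable(before: Dict[str, int], after: Dict[str, int],
                     max_delta: int = 0) -> bool:
    """True if no counter grew by more than max_delta (hypothetical threshold)."""
    return all(delta <= max_delta for delta in drop_deltas(before, after).values())


if __name__ == "__main__":
    # Illustrative counter snapshots for one port, not real data.
    before = {"psn_out_of_range": 10, "psn_out_of_sequence": 4}
    after = {"psn_out_of_range": 10, "psn_out_of_sequence": 7}
    print(drop_deltas(before, after))
    print(drops_acceptable(before, after, max_delta=5))
```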

# 1st box:

cd $DEMOS_ROOT/gaudi/hccl_test; HLS_ID=0

HCCL_COMM_ID=10.111.233.253:5555 python3 run_hccl_demo.py -clean --test send_recv --nranks 24 --loop 1000 --node_id 0 --size 16m --ranks_per_node 8 --ranks_list "0,16,16,0,8,16,16,8,1,16,16,1,9,16,16,9,2,16,16,2,10,16,16,10,3,16,16,3,11,16,16,11,4,16,16,4,12,16,16,12,5,16,16,5,13,16,16,13,6,16,16,6,14,16,16,14,7,16,16,7,15,16,16,15"

# 2nd box:

cd $DEMOS_ROOT/gaudi/hccl_test; HLS_ID=0

HCCL_COMM_ID=10.111.233.253:5555 python3 run_hccl_demo.py -clean --test send_recv --nranks 24 --loop 1000 --node_id 1 --size 16m --ranks_per_node 8 --ranks_list "0,16,16,0,8,16,16,8,1,16,16,1,9,16,16,9,2,16,16,2,10,16,16,10,3,16,16,3,11,16,16,11,4,16,16,4,12,16,16,12,5,16,16,5,13,16,16,13,6,16,16,6,14,16,16,14,7,16,16,7,15,16,16,15"

# 3rd box:

cd $DEMOS_ROOT/gaudi/hccl_test; HLS_ID=0

HCCL_COMM_ID=10.111.233.253:5555 python3 run_hccl_demo.py -clean --test send_recv --nranks 24 --loop 1000 --node_id 2 --size 16m --ranks_per_node 8 --ranks_list "0,16,16,0,8,16,16,8,1,16,16,1,9,16,16,9,2,16,16,2,10,16,16,10,3,16,16,3,11,16,16,11,4,16,16,4,12,16,16,12,5,16,16,5,13,16,16,13,6,16,16,6,14,16,16,14,7,16,16,7,15,16,16,15"

24:1 Incast Congestion¶

You can also test the extreme case of 24:1 congestion by extending the send_recv test above to additional boxes.
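Extending the test mostly means writing a longer --ranks_list. The sketch below generalizes the two lists above. It treats the first rank of the last box as the target, and every other rank contributes the entries "p,hub,hub,p", with senders interleaved across boxes. With two boxes it reproduces the 8:1 list, and with three boxes it reproduces the 16:1 list. `incast_ranks_list` is a hypothetical helper, and the four-box layout for 24:1 (32 ranks, target rank 24) is an assumption extrapolated from those lists, not a documented configuration.

```python
from typing import List, Tuple


def incast_ranks_list(num_boxes: int, ranks_per_node: int = 8) -> Tuple[int, str]:
    """Return (nranks, ranks_list) for an N:1 incast onto the last box's first rank.

    Each sender p contributes "p,hub,hub,p"; senders are interleaved across
    boxes (box0 local 0, box1 local 0, ..., box0 local 1, ...), matching the
    ordering of the 16:1 list above.
    """
    if num_boxes < 2:
        raise ValueError("need at least one sending box and one target box")
    nranks = num_boxes * ranks_per_node
    hub = (num_boxes - 1) * ranks_per_node  # first rank of the last box
    entries: List[int] = []
    for local in range(ranks_per_node):
        for box in range(num_boxes - 1):
            p = box * ranks_per_node + local
            entries += [p, hub, hub, p]
    return nranks, ",".join(str(e) for e in entries)


if __name__ == "__main__":
    nranks, ranks_list = incast_ranks_list(num_boxes=4)  # hypothetical 24:1 layout
    print(f"--nranks {nranks} --ranks_list \"{ranks_list}\"")
```

Each of the four boxes would then run the same command with its own --node_id (0 through 3).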

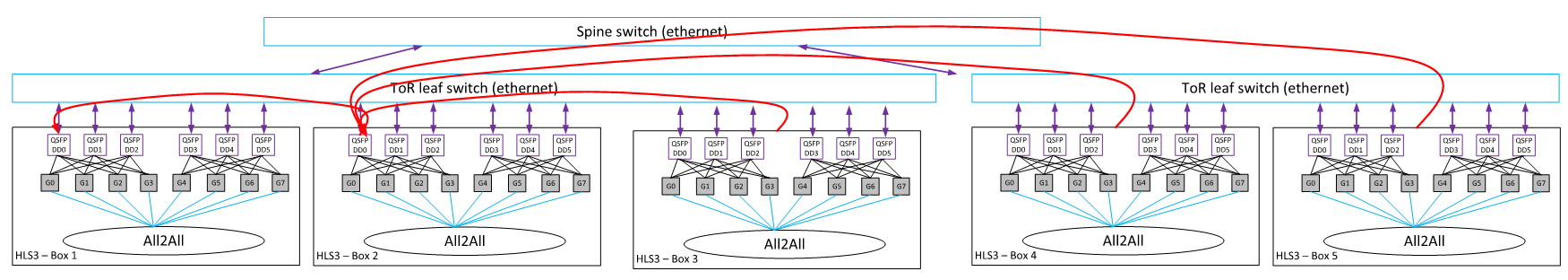

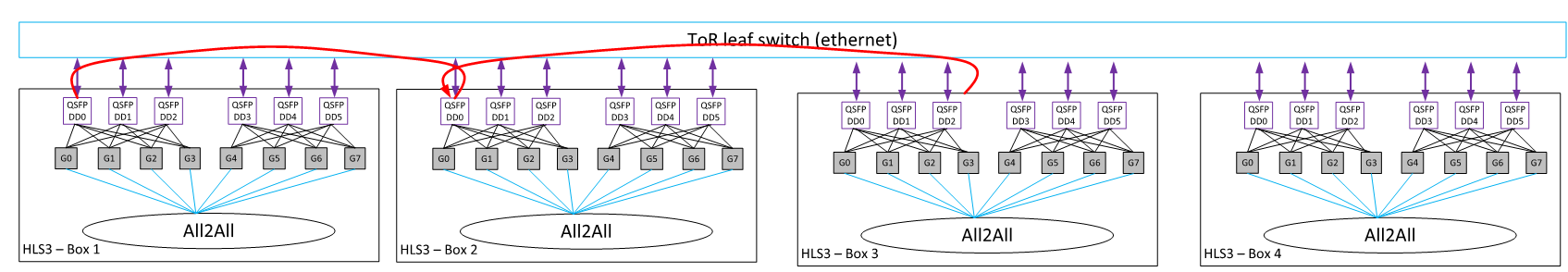

Multi-Incast Congestion Within a Leaf Switch and Across Leaf/Spine Switches¶

We should also generate multi-incast congestion tests that reproduce the scenarios shown in the figures, and verify that these tests deliver reasonable performance without any packet drops.

Link Down Between Leaf and Spine¶

Because the leaf and spine switches use ECMP (Equal-Cost Multi-Path) hashing, the fabric should ideally tolerate link failures in the spine switches, and the HCCL benchmarks should continue to run on the HLS-3 boxes. To perform this experiment, we recommend that the administrator log in to one of the spine switches in use and deliberately bring an Ethernet interface DOWN. After disabling the link, run the HCCL allreduce or all2all benchmark. Performance will drop, but the benchmark should continue to run. We do NOT expect the benchmark to fail because of a link failure.
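The failure tolerance described above comes from ECMP spreading flows across every live equal-cost uplink. The toy model below hashes each flow onto the list of live links with `zlib.crc32`; real switches use their own hardware hash functions, and the helper names and flow tuples are illustrative assumptions. Removing a link only shrinks the set the hash chooses from, so every flow still gets a path, while total uplink capacity, and therefore performance, drops roughly in proportion to the links lost.

```python
import zlib
from collections import Counter
from typing import List, Sequence, Tuple

# (src_ip, dst_ip, src_port, dst_port); illustrative values only.
Flow = Tuple[str, str, int, int]


def ecmp_pick(flow: Flow, live_links: Sequence[str]) -> str:
    """Toy ECMP: hash the flow tuple and pick one of the live equal-cost links."""
    if not live_links:
        raise RuntimeError("no live uplinks: traffic cannot be forwarded")
    key = "|".join(map(str, flow)).encode()
    return live_links[zlib.crc32(key) % len(live_links)]


def load_per_link(flows: List[Flow], live_links: Sequence[str]) -> Counter:
    """Count how many flows the toy hash places on each live link."""
    return Counter(ecmp_pick(f, live_links) for f in flows)


if __name__ == "__main__":
    uplinks = ["Et1/1", "Et1/3", "Et1/5", "Et1/7"]
    # 4791 is the RoCEv2 UDP destination port; source ports vary per flow.
    flows = [("10.0.0.1", "10.0.1.1", 49152 + i, 4791) for i in range(64)]
    print("all links up:", load_per_link(flows, uplinks))
    print("Et1/1 down:  ", load_per_link(flows, uplinks[1:]))
    # Remaining uplink capacity scales roughly with the fraction of live links.
    print(f"capacity ~ {len(uplinks[1:]) / len(uplinks):.0%} of original")
```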

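To run this test, shut down the link(s) on the spine switch. The following example shuts down interfaces Et1/1, 1/3, 1/5, and 1/7 (the interfaces selected by the range 1/1-7):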

++++++++++++++++++++++++++++++++++++++++++++

2b25u25-temp-spine#configure

2b25u25-temp-spine(config)#interface ethernet 1/1-7

2b25u25-temp-spine(config-if-Et1/1,1/3,1/5,1/7)#shutdown

2b25u25-temp-spine(config-if-Et1/1,1/3,1/5,1/7)#end

2b25u25-temp-spine#sh int status

+++++++++++++++++++++++++++++++++++++++++++++

Multiple Links Down Between Leaf and Spine¶

Repeat the experiment above with multiple Ethernet interface links DOWN in the spine switch. The HCCL benchmarks should continue to work, but with reduced performance.