Intel Gaudi RDMA PerfTest Tool


This document provides guidelines for installing and running the Intel® Gaudi® RDMA PerfTest tool, habanalabs-perf-test, on Intel Gaudi accelerators. The tool is designed for low-level, high-performance connectivity testing through ping-pong, bandwidth, and latency communication tests. It uses the Reliable Connection (RC) transport and RDMA Write operations to deliver performance measurements.

Note

The tool is only supported on Gaudi 3 and Gaudi 2, and can be used only with their NICs.

The tool can only be used in a container and is not supported on a VM.

Prerequisites

Make sure the following packages are installed:

habanalabs-dkms
habanalabs-rdma-core

For more information about installing these packages, see Custom Driver and Software Installation.

Note

If you have upgraded to the 1.23.0 software version, the above packages are already included, and no additional installation is required.

Installation

Use the instructions that match your operating system.

Ubuntu 24.04 (noble):

Download the package file:

wget https://vault.habana.ai/artifactory/debian/noble/pool/main/h/habanalabs-perf-test/habanalabs-perf-test_1.23.0-695_amd64.deb

Install the package:

sudo apt install ./habanalabs-perf-test_1.23.0-695_amd64.deb -y

Ubuntu 22.04 (jammy):

Download the package file:

wget https://vault.habana.ai/artifactory/debian/jammy/pool/main/h/habanalabs-perf-test/habanalabs-perf-test_1.23.0-695_amd64.deb

Install the package:

sudo apt install ./habanalabs-perf-test_1.23.0-695_amd64.deb -y

RHEL 9.4:

Download the package file:

wget https://vault.habana.ai/artifactory/rhel/9/9.4/habanalabs-perf-test-1.23.0-695.el9.x86_64.rpm

Install the package:

sudo dnf install ./habanalabs-perf-test-1.23.0-695.el9.x86_64.rpm -y

TencentOS 3.1:

Download the package file:

wget https://vault.habana.ai/artifactory/tencentos/3/3.1/habanalabs-perf-test-1.23.0-695.tl3.x86_64.rpm

Install the package:

sudo dnf install ./habanalabs-perf-test-1.23.0-695.tl3.x86_64.rpm -y
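The four variants above differ only by distribution. The following sketch picks the matching package from /etc/os-release and installs it with apt or dnf. It assumes the os-release ID, VERSION_ID, and VERSION_CODENAME values used in the case patterns (ubuntu with noble or jammy, rhel 9.4, tencentos 3.1); check them against your own /etc/os-release first. The function names perftest_pkg_url and install_perftest are illustrative and are not part of the package.

```shell
#!/usr/bin/env bash
# Sketch: choose and install the habanalabs-perf-test 1.23.0-695 package for this OS.
# Assumption: the os-release values in the case patterns below match your distribution.

# perftest_pkg_url [OS_RELEASE_FILE]: prints the package URL, or fails on an unknown OS.
perftest_pkg_url() {
    local os_release="${1:-/etc/os-release}"
    local base="https://vault.habana.ai/artifactory"
    local id version_id codename
    # Source os-release in a subshell so its variables do not leak into this shell.
    IFS='|' read -r id version_id codename < <(
        unset ID VERSION_ID VERSION_CODENAME
        . "$os_release"
        printf '%s|%s|%s\n' "${ID:-}" "${VERSION_ID:-}" "${VERSION_CODENAME:-}"
    )
    case "${id}:${version_id}:${codename}" in
        ubuntu:*:noble)
            echo "${base}/debian/noble/pool/main/h/habanalabs-perf-test/habanalabs-perf-test_1.23.0-695_amd64.deb" ;;
        ubuntu:*:jammy)
            echo "${base}/debian/jammy/pool/main/h/habanalabs-perf-test/habanalabs-perf-test_1.23.0-695_amd64.deb" ;;
        rhel:9.4:*)
            echo "${base}/rhel/9/9.4/habanalabs-perf-test-1.23.0-695.el9.x86_64.rpm" ;;
        tencentos:3.1:*)
            echo "${base}/tencentos/3/3.1/habanalabs-perf-test-1.23.0-695.tl3.x86_64.rpm" ;;
        *)
            echo "unsupported OS: ${id} ${version_id} ${codename}" >&2
            return 1 ;;
    esac
}

# install_perftest: downloads the matching package into the current directory and installs it.
install_perftest() {
    local url pkg
    url=$(perftest_pkg_url) || return 1
    pkg="./${url##*/}"
    wget "$url" || return 1
    case "$pkg" in
        *.deb) sudo apt install "$pkg" -y ;;
        *.rpm) sudo dnf install "$pkg" -y ;;
    esac
}
```

Because perftest_pkg_url accepts an alternate os-release file, you can check the mapping on any machine without downloading or installing anything; install_perftest then performs the same wget and apt/dnf steps shown above.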

Options and Usage

The RDMA PerfTest tool is run through the cloud_run.py Python wrapper script. The wrapper can run across an entire data center, covering all pairwise permutations of the tested nodes for a thorough evaluation.

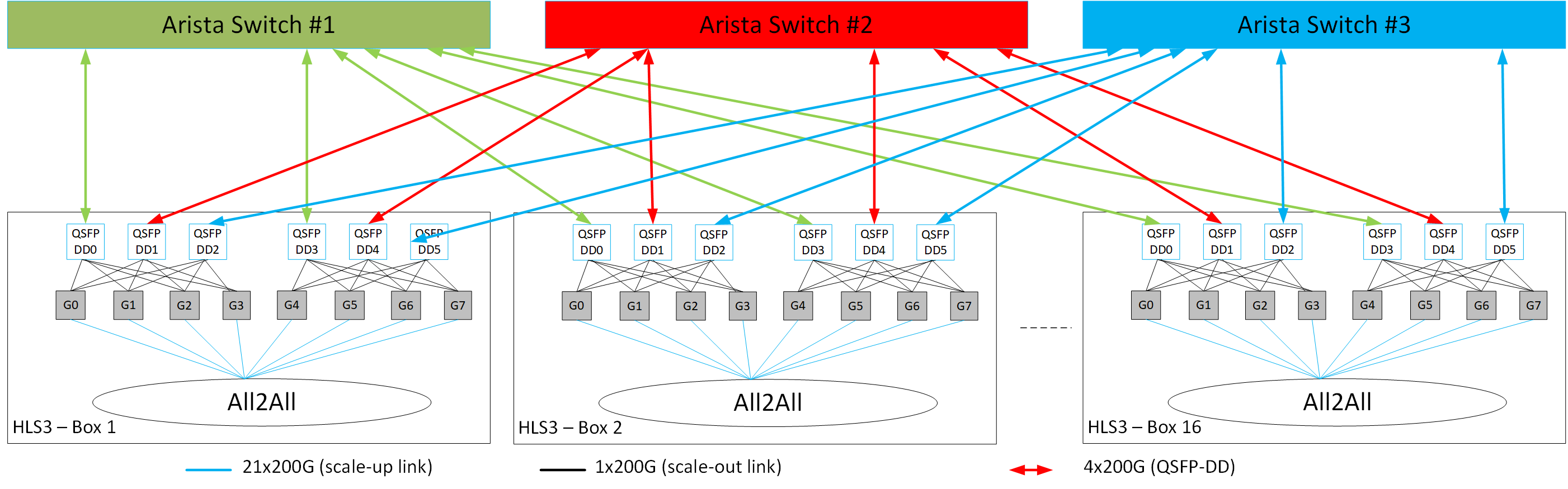

The following port connectivity options are supported for testing on multiple nodes:

Note

The PerfTest tool supports L2 topology connectivity (two nodes connected through one switch).

Prerequisites

The following prerequisites are required to run the cloud_run.py Python wrapper script:

Install the Python packages listed in the requirements file:

pip install -r requirements.txt

Make sure SSH keys are configured on all tested nodes so that they can be accessed without a password. The script relies on SSH sessions established with SSH keys.

Note

Starting from the 1.18.0 release, SSH host keys have been removed from the Docker images. To add them, run /usr/bin/ssh-keygen -A inside the Docker container. If you are running on Kubernetes, make sure the SSH host keys are identical across all Docker containers. To achieve this, either build a new Docker image on top of the Intel Gaudi Docker image by adding a new layer, RUN /usr/bin/ssh-keygen -A, or mount the SSH host keys externally.

Verify that all external NIC ports are active and accessible on each node. For more details, see Disable/Enable NICs.

Prepare a host file listing all tested nodes with their SSH connection details (IP and port). Use the following format: SSH-IP:SSH-PORT. See the example below:

kuku-kvm12-lake:22
kuku-kvm13-lake:22
kuku-kvm14-lake:22
kuku-kvm15-lake:22
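Before running the wrapper, it can help to confirm that the host file parses cleanly and that key-based SSH works for every entry. The sketch below is a minimal helper under stated assumptions: each entry is HOST:PORT, entries are separated by spaces or newlines, and the functions parse_hostfile and check_ssh_access are hypothetical, not part of the PerfTest package. BatchMode=yes makes ssh fail instead of prompting for a password, which matches the wrapper's reliance on SSH keys.

```shell
#!/usr/bin/env bash
# Sketch: validate a host file and check key-based SSH access to every node.
# Assumption: entries are HOST:PORT, separated by spaces or newlines.

# parse_hostfile FILE: prints "HOST PORT" per entry; returns 1 if any entry is malformed.
parse_hostfile() {
    local entry host port rc=0
    while read -r entry; do
        [[ -z "$entry" ]] && continue
        host="${entry%:*}"
        port="${entry##*:}"
        # Reject entries without a colon, an empty host, or a port outside 1-65535.
        if [[ "$entry" != *:* || -z "$host" || ! "$port" =~ ^[0-9]+$ ]] \
            || (( 10#$port < 1 || 10#$port > 65535 )); then
            echo "malformed host file entry: $entry" >&2
            rc=1
            continue
        fi
        echo "$host $port"
    done < <(tr -s '[:space:]' '\n' < "$1")
    return "$rc"
}

# check_ssh_access FILE: runs "true" on every node using SSH keys only (no password prompts).
check_ssh_access() {
    local host port failed=0
    while read -r host port; do
        # -n keeps ssh from consuming the loop's stdin.
        if ssh -n -o BatchMode=yes -o ConnectTimeout=5 -p "$port" "$host" true; then
            echo "OK   ${host}:${port}"
        else
            echo "FAIL ${host}:${port}"
            failed=1
        fi
    done < <(parse_hostfile "$1")
    return "$failed"
}
```

For example, check_ssh_access ./hostfile prints one OK or FAIL line per node and returns a non-zero status if any node could not be reached without a password.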

Note

When using a hostname instead of an IP address, make sure to use the FQDN format, hostname.domain; otherwise, the SSH connection might fail.

Configure the config.sh script for all tested nodes as follows:

Write the LD_LIBRARY_PATH environment variable to a file. This ensures it can be accessed in remote SSH sessions during testing:

echo "LD_LIBRARY_PATH=${LD_LIBRARY_PATH}" | tee ~/.ENV_SCALEUP

Create the gaudinet.json file and configure it as described in the Generating a gaudinet.json Example section. Once done, set the file path by running the following:

echo "GAUDINET_PATH=<PATH/TO/gaudinet.json>" | tee -a ~/.ENV_SCALEUP

(ping-pong and write_bw tests only) Create the server_internal_connectivity.csv file and configure it as described in Internal Ports Testing. Once done, set the file path by running the following:

echo "SERVER_INTERNAL_CONNECTIVITY_PATH=<PATH/TO/server_internal_connectivity.csv>" | tee -a ~/.ENV_SCALEUP

Run the script on all tested nodes:

bash ./config.sh
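The config.sh steps above can be collected into a single helper. This is a minimal sketch, not the script shipped with the tool: the function name write_scaleup_env and its arguments are hypothetical, and the paths you pass in stand for your own gaudinet.json and server_internal_connectivity.csv locations. It produces the same ~/.ENV_SCALEUP contents as the echo | tee commands, where the first line overwrites the file and the remaining lines append.

```shell
#!/usr/bin/env bash
# Sketch of config.sh: writes the variables the wrapper reads in remote SSH sessions.
# write_scaleup_env ENV_FILE GAUDINET_JSON [INTERNAL_CSV]   (hypothetical helper)
write_scaleup_env() {
    local env_file="$1" gaudinet="$2" internal_csv="${3:-}"
    # Overwrite, like: echo "LD_LIBRARY_PATH=..." | tee ~/.ENV_SCALEUP
    echo "LD_LIBRARY_PATH=${LD_LIBRARY_PATH:-}" > "$env_file"
    # Append, like: echo "GAUDINET_PATH=..." | tee -a ~/.ENV_SCALEUP
    [[ -f "$gaudinet" ]] || echo "warning: $gaudinet does not exist yet" >&2
    echo "GAUDINET_PATH=${gaudinet}" >> "$env_file"
    # Only needed for internal ports testing (ping_pong and write_bw).
    if [[ -n "$internal_csv" ]]; then
        [[ -f "$internal_csv" ]] || echo "warning: $internal_csv does not exist yet" >&2
        echo "SERVER_INTERNAL_CONNECTIVITY_PATH=${internal_csv}" >> "$env_file"
    fi
}

# Example usage on each node (paths are placeholders):
#   write_scaleup_env ~/.ENV_SCALEUP /path/to/gaudinet.json /path/to/server_internal_connectivity.csv
```

Passing the file path as an argument instead of hard-coding ~/.ENV_SCALEUP keeps the helper easy to try against a scratch file before writing the real one.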

Internal Ports Testing

Note

The internal ports testing is supported for ping-pong and write_bw tests only.

To perform testing on the internal ports, follow the steps below:

Review the example configurations of the server_internal_connectivity.csv file in the internal_data folder. The examples show internal NIC connectivity map tables. Check whether any of these configurations matches your server setup; if one does, use it for your setup.

If none of the examples matches your configuration, create a new internal NIC port connectivity map in CSV format using the following template:

<source-device-module-id>,<source-port-number>,<destination-device-module-id>,<destination-port-number>

Note

In the CSV file, every module ID present on the server must appear in the source-device-module-id column. For example, if port 0 of module OAM_i is connected to port 1 of module OAM_j, the CSV should include both of the following rows:

i,0,j,1
j,1,i,0

Add the --internal switch after perftest in the tool command line, as shown in Test-specific Options.
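A malformed or one-directional connectivity map is easy to miss by eye. The following sketch checks a CSV file against the rules described above: four integer fields per row, and every link listed in both directions (i,0,j,1 requires j,1,i,0). It assumes the file has no header row; check_internal_csv is a hypothetical helper, not part of the tool.

```shell
#!/usr/bin/env bash
# Sketch: sanity-check a server_internal_connectivity.csv file.
# Assumptions: no header row; each row follows
#   <source-device-module-id>,<source-port-number>,<destination-device-module-id>,<destination-port-number>
check_internal_csv() {
    awk -F',' '
        { gsub(/[ \t\r]/, "") }               # drop stray spaces, tabs, and CR characters
        $0 == "" { next }                     # skip blank lines
        $0 !~ /^[0-9]+,[0-9]+,[0-9]+,[0-9]+$/ {
            printf "line %d: expected four integer fields: %s\n", NR, $0
            bad = 1
            next
        }
        { row[$1 "," $2 "," $3 "," $4] = NR } # remember each link and its line number
        END {
            # Every link must also appear in the reverse direction.
            for (r in row) {
                split(r, f, ",")
                rev = f[3] "," f[4] "," f[1] "," f[2]
                if (!(rev in row)) {
                    printf "line %d: missing reverse entry %s\n", row[r], rev
                    bad = 1
                }
            }
            exit bad
        }
    ' "$1"
}
```

Running check_internal_csv ./server_internal_connectivity.csv prints one line per problem and exits with a non-zero status if any are found, so it can gate a config.sh run.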

Python Wrapper Options

Use the -h argument to view all options.

The table below describes all the cloud_run.py options available.

| Option | Description |
|---|---|
| -h | Shows the help message and exits |
| --host_file | Sets the path to a host_file that includes a host IP list |
|  | Sets the SSH private key file path. This is optional. If not set, the wrapper finds it automatically under |
| --output | Saves all the log files to the specified path (the flag must be set) |
|  | (Optional) Allows customizing the |

PerfTest Options

Use the -h argument to view all options.

The table below describes all the perftest options available.

| Option | Description |
|---|---|
| -h | Shows the help message and exits |
| --tcp_port | Specifies the TCP port range the script uses (default: 1100) |
| --internal | Enables internal NIC ports testing. Supported only with ping-pong and write_bw tests. |
|  | Disables |
|  | Enables testing between the corresponding ports across two systems. The ports are tested one by one in matching pairs, for example, port 0 with port 0, port 1 with port 1, and so on. |
|  | Tests all the internal routes between all existing Gaudis in the node. Only external NICs are tested. |

Test-specific Options

Use the -h argument to view all options.

The tables below describe all the testing options available.

ping_pong options:

| Option | Description |
|---|---|
| -h | Shows the help message and exits |
| --size | Sets the size of the message to exchange (default: 4096) |
| --rx_depth | Sets the number of receives to post at a time (default: 128) |
| --iters | Sets the number of exchanges (default: 10) |
| --chk | Validates the received buffer |

Example:

python3 ./cloud_run.py --host_file ./hostfile --output /tmp/output perftest --internal --basic_check --tcp_port 1100 ping_pong --size 4096 --rx_depth 128 --iters 10 --chk

write_bw options:

| Option | Description |
|---|---|
| -h | Shows the help message and exits |
| --size | Sets the size of the message to exchange (default: 1048576) |
| --rx_depth | Sets the number of receives to post at a time (default: 128) |
| --iters | Sets the number of exchanges (default: 100000 for Gaudi 3, 50000 for Gaudi 2) |
|  | Sets the pass/fail threshold value for the test, in Gbps (default: not used) |

Example:

python3 ./cloud_run.py --host_file ./hostfile --output /tmp/output perftest --internal --basic_check --tcp_port 1100 write_bw --size 1048576 --rx_depth 128 --iters 100000

write_lat options:

| Option | Description |
|---|---|
| -h | Shows the help message and exits |
| --size | Sets the size of the message to exchange (default: 1024) |
| --rx_depth | Sets the number of receives to post at a time (default: 128) |
| --iters | Sets the number of exchanges (default: 500000) |
|  | Sets the pass/fail threshold value for the test, in ms (default: not used) |

Example:

python3 ./cloud_run.py --host_file ./hostfile --output /tmp/output perftest --basic_check --tcp_port 1100 write_lat --size 1024 --rx_depth 128 --iters 100000
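To run the three tests one after another with the same host file, the example commands can be wrapped in a small helper. This is a sketch that assumes the flags exactly as they appear in the examples; run_perftest, DRY_RUN, and INTERNAL are hypothetical names introduced here. It refuses INTERNAL=1 for write_lat because internal ports testing is supported only for ping-pong and write_bw, and it runs each test with its default test-specific options.

```shell
#!/usr/bin/env bash
# Sketch: run one PerfTest test through cloud_run.py using the flags from the examples.
# run_perftest TEST HOSTFILE OUTPUT_DIR   (hypothetical helper)
#   INTERNAL=1  add --internal (accepted only for ping_pong and write_bw)
#   DRY_RUN=1   print the command instead of running it
run_perftest() {
    local name="$1" hostfile="$2" output="$3"
    case "$name" in
        ping_pong|write_bw|write_lat) ;;
        *) echo "unknown test: $name" >&2; return 1 ;;
    esac
    local -a cmd=(python3 ./cloud_run.py --host_file "$hostfile" --output "$output" perftest)
    if [[ -n "${INTERNAL:-}" ]]; then
        if [[ "$name" == write_lat ]]; then
            echo "error: --internal is supported only with ping_pong and write_bw" >&2
            return 1
        fi
        cmd+=(--internal)
    fi
    cmd+=(--basic_check --tcp_port 1100 "$name")
    if [[ -n "${DRY_RUN:-}" ]]; then
        echo "${cmd[*]}"
    else
        "${cmd[@]}"
    fi
}

# Example usage (each run gets its own timestamp-named folder under /tmp/output):
#   for t in ping_pong write_bw write_lat; do run_perftest "$t" ./hostfile /tmp/output; done
```

Building the command as an array keeps host file and output paths with spaces intact, and DRY_RUN=1 lets you review the exact command lines before starting a long data-center run.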

Expected output:

* CloudReport_<timestamp>.txt - Tested nodes summary.
* <server_host_name>_<client_host_name>
    └── scaleUpRepor_<timestamp>.txt - Specific server and client pair summary.
    └── perftest
        └── <network_ip>
            └── <server - device (ib_dev)>
                └── <device_port (ib_port)>
                    └── <client - device (ib_dev)>
                        └── <device_port (ib_port)>.txt - Both device application prints.

The output is saved in the output directory in a timestamp-named folder.
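When several runs accumulate under the same output directory, a quick way to find the most recent summary is to search by file name. This sketch assumes the layout shown above and GNU find (for -printf); latest_cloud_report and pair_reports are hypothetical helpers. The pattern scaleUpRepor*.txt is deliberately loose so that it matches the per-pair file name exactly as written above.

```shell
#!/usr/bin/env bash
# Sketch: locate summary files under the --output directory.
# Assumptions: layout as shown above; GNU find is available (for -printf).

# latest_cloud_report DIR: prints the most recently modified CloudReport_*.txt.
latest_cloud_report() {
    find "$1" -type f -name 'CloudReport_*.txt' -printf '%T@ %p\n' \
        | sort -n | tail -n 1 | cut -d' ' -f2-
}

# pair_reports DIR: lists every server/client pair summary, sorted by path.
pair_reports() {
    find "$1" -type f -name 'scaleUpRepor*.txt' | sort
}

# Example usage:
#   less "$(latest_cloud_report /tmp/output)"
```

Sorting by modification time rather than by name avoids depending on the exact timestamp format used in the folder and file names.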